Disclaimer: This isn't a tutorial, so to speak. These are my general thoughts on the process and journey of building some models.

I have wanted to start using the Unity engine to learn reinforcement learning for a while. Prior to beginning, I was coding all my learning environments in Pygame. I am going to write another post about all the failures I experienced doing this. It was arduous building out the small world. Pygame also doesn't really handle true physics well, and the physics engines I had to import were kind of a nightmare. (Erin Catto did a good job with Box2D. Check it out for your Pygame physics needs.) It was an extremely enlightening experience in which my repeated failures (before some eventual success) allowed me to build a toolkit for intuitively understanding RL/ML concepts.

Building the physics model and the ML model together within the same framework was not the best idea. I couldn't focus on one exclusively, and I would iterate incessantly. The main idea was to get a car to drive around a circular track without hitting the walls. Sounds very simple. Conceptually it is. And in principle it was. But we had to do a ton of conversion from pixel dimensions to meters (because Box2D works in meters, so a naive one-to-one mapping treats each pixel as 1 m). Then scaling the expected motion in m/s to the physical track layout was tricky. All this while trying to render an image and have it update the way we expected.
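To make the conversion problem concrete, here is a minimal sketch of the kind of helper functions involved. It is not my original code: the scale factor `PPM`, the window height, and the frame rate are all hypothetical values picked for illustration, and the function names are made up. It assumes the physics world measures in meters with y pointing up, while the Pygame window measures in pixels with y pointing down.

```python
"""Sketch: converting between physics units (meters) and screen units (pixels)."""

PPM = 20.0            # pixels per meter (hypothetical scale; pick what fits your track)
SCREEN_HEIGHT = 600   # window height in pixels (hypothetical)
FPS = 60              # frames, and physics steps, per second (hypothetical)


def pixels_to_meters(px: float) -> float:
    """Convert a length measured in pixels to meters."""
    return px / PPM


def meters_to_pixels(m: float) -> float:
    """Convert a length measured in meters to pixels."""
    return m * PPM


def world_to_screen(x_m: float, y_m: float) -> tuple[int, int]:
    """Map a physics position to a pixel position, flipping the y axis."""
    return round(x_m * PPM), round(SCREEN_HEIGHT - y_m * PPM)


def screen_to_world(x_px: float, y_px: float) -> tuple[float, float]:
    """Map a pixel position back to a physics position."""
    return x_px / PPM, (SCREEN_HEIGHT - y_px) / PPM


def speed_to_pixels_per_frame(speed_mps: float) -> float:
    """How many pixels an object moving at speed_mps covers in one frame."""
    return speed_mps * PPM / FPS


if __name__ == "__main__":
    print(world_to_screen(10.0, 5.0))        # (200, 500)
    print(speed_to_pixels_per_frame(15.0))   # 5.0
```

Keeping every conversion behind one scale constant means the track can be resized by changing a single number, instead of hunting for pixel values scattered through the physics and rendering code.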

Aside: When troubleshooting your model, it is really helpful to be able to see what your agent is doing, even if it is moving really fast. Sometimes you can spot something by eye much sooner than the data at the end of the run will show it. That being said, running it visually also makes for a slower training process.

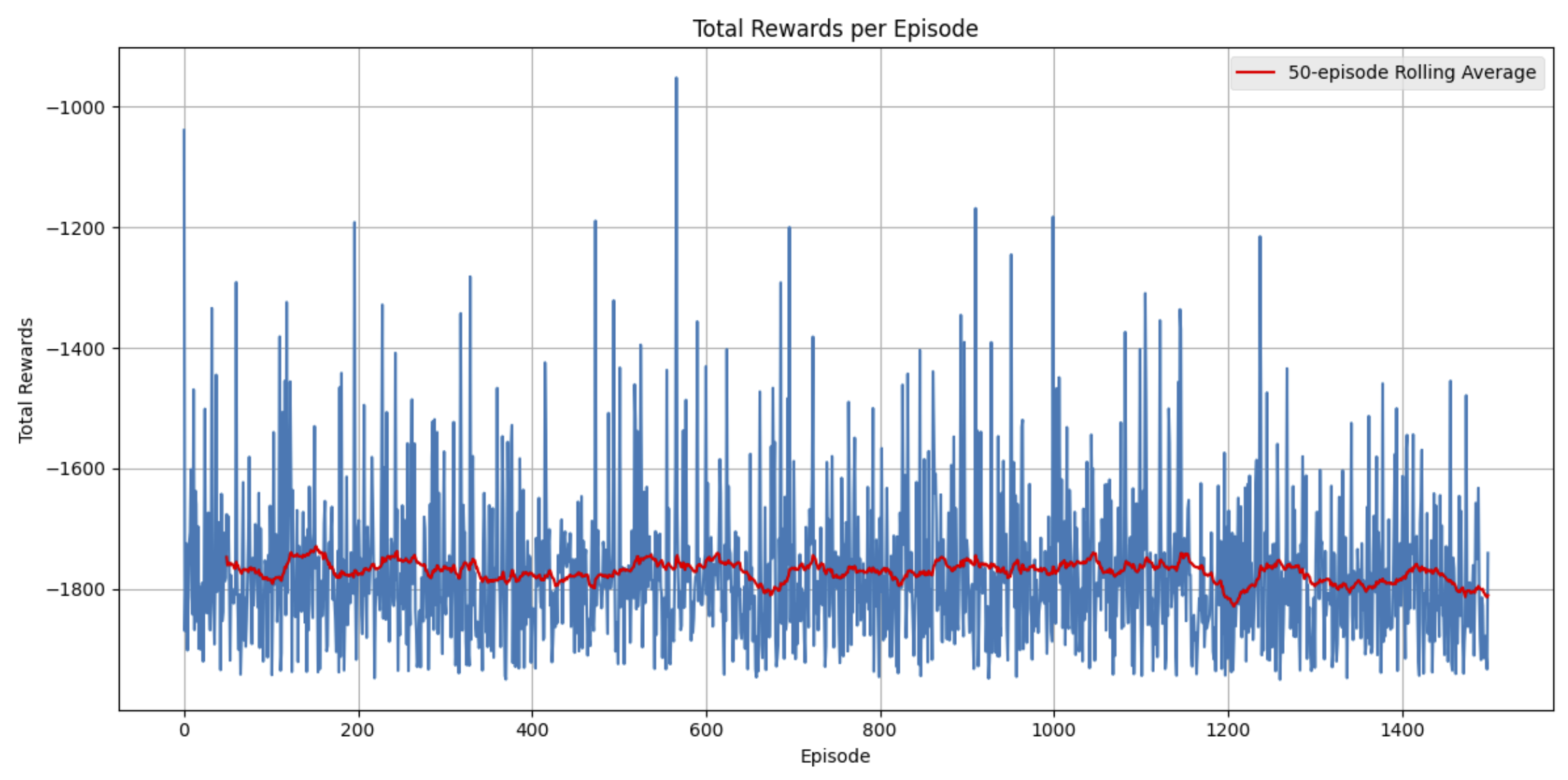

Pygame is also not optimized for a bunch of physics calculations. As a result, there was a bottleneck rendering at speed while learning. That being said, we got some traction and had a car driving around a track. At first, the progress was atrocious. (See below.) The data was essentially random. The car would go around the track better, but there were outrageous spikes at the start. I thought I had configured the PyTorch agent incorrectly, but looking back, a lot of the issue came down to the mismatch between the ambition of what I wanted to do and the toolset that I had given myself.

Goals for Unity ML

Originally, I wanted to stay away from Unity. I didn't like the shady pricing increases they jammed through about a year ago. Unfortunately, Godot RL-Agents is community maintained. I want to use it in the long run, as I support Godot. Right now, I don't want a repeat of trying to make the environment work while I should be refining my models. Unreal Engine doesn't really have ML tools in its stable that are intuitive and friendly to work with either. Unity is the most documented and structured ML platform currently.

Benefits to Unity ML:

- Build a Repeatable Testing Framework: I made a lot of changes to the physics when I coded my environment by hand. Good for learning, bad for reproducibility. In Unity, physics works out of the box.

- Rendering Test Environment: Setting up cameras, recording runs and building a clean environment will allow me to review more easily (and possibly generate some cool videos).

- Speed Up Training: With multi-agent support and parallel environments, Unity ML allows you to run multiple training instances in one project. I can designate one master environment and copy it multiple times inside the same project. This dramatically increases the training speed.

- PyTorch Integration: PyTorch slots in pretty easily and handles all the training on the back end.

- Possible Benefit: I have to learn C# for Unity coding.

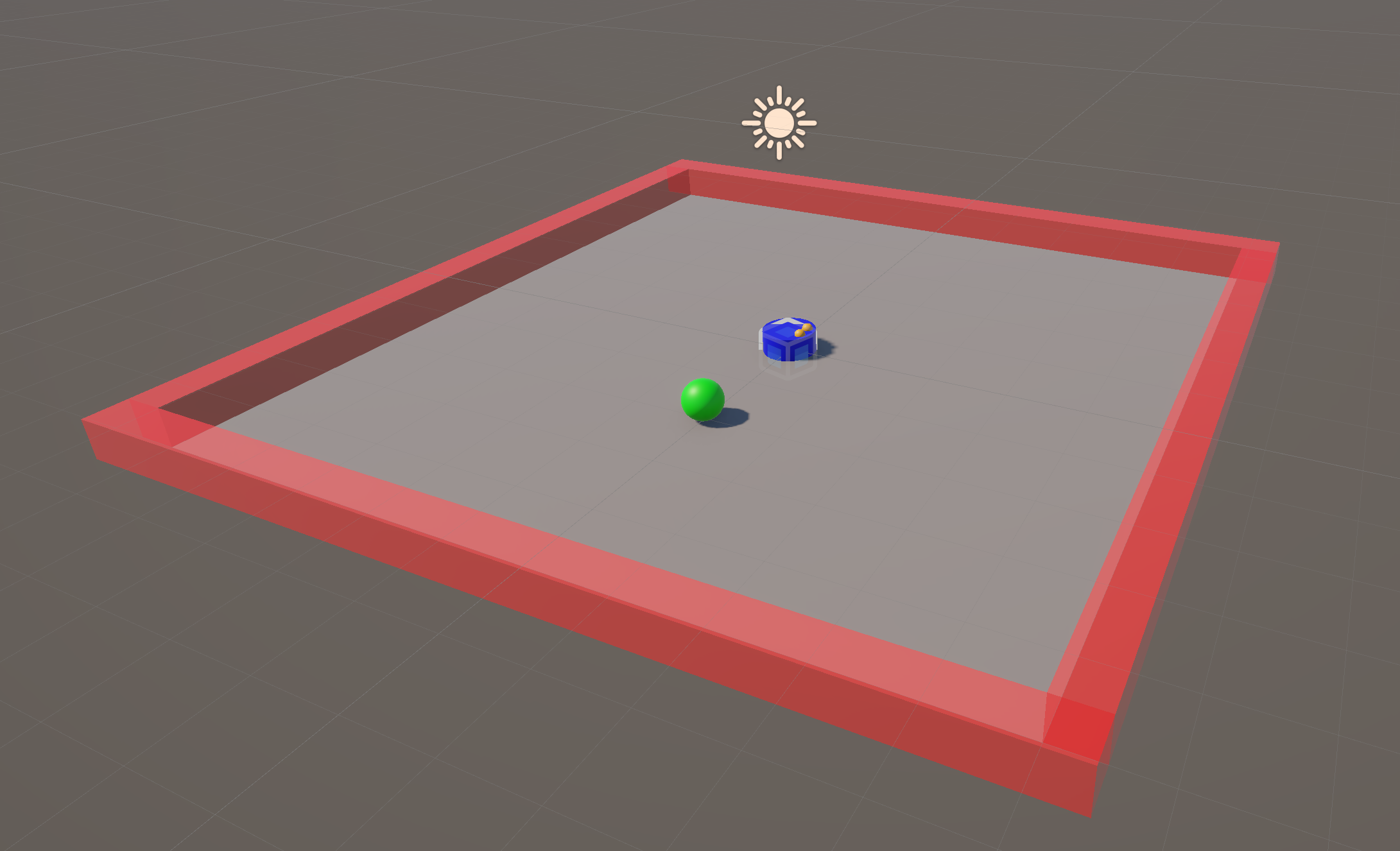

Below is my initial test scene. It is clear and simple. The ML agent is in blue, the goal will be designated in green. The red indicates a penalty area.

The goal for my initial config is to learn the ropes and build some initial scripting. The agent is going to "look" around with its eyes for the green ball. It makes a move. If it connects with the ball, it gets a reward. If it contacts the wall, it gets a negative reward for however long it remains in contact. Then it makes another move and tries again. We set a time limit so as not to train indefinitely. I am assuming a lot of my C# code is going to be something I can extend fairly easily.
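The scoring rules above can be sketched in a few lines. This is only an illustration in Python, not the C# agent script and not the Unity ML-Agents API: the reward values, the step limit, and the names `score_step` and `StepResult` are all hypothetical. It also assumes that reaching the ball ends the episode, which is one reasonable choice among several.

```python
"""Sketch: per-step scoring for the seek-the-ball scene (illustrative values only)."""
from dataclasses import dataclass

GOAL_REWARD = 1.0      # reward for touching the green ball (hypothetical value)
WALL_PENALTY = -0.05   # penalty for each step spent touching a wall (hypothetical value)
MAX_STEPS = 500        # time limit per episode, in steps (hypothetical value)


@dataclass
class StepResult:
    reward: float
    done: bool


def score_step(touching_goal: bool, touching_wall: bool, step_count: int) -> StepResult:
    """Score one move and decide whether the episode is over."""
    if touching_goal:
        # Assumption: reaching the ball ends the episode.
        return StepResult(GOAL_REWARD, True)
    # The wall penalty is charged on every step the contact lasts.
    reward = WALL_PENALTY if touching_wall else 0.0
    # The time limit stops an agent that never finds the ball.
    return StepResult(reward, step_count >= MAX_STEPS)


if __name__ == "__main__":
    # The agent leans on a wall for three steps, then reaches the ball.
    contacts = [(False, True)] * 3 + [(True, False)]
    total = 0.0
    for step, (goal, wall) in enumerate(contacts, start=1):
        total += score_step(goal, wall, step).reward
    print(round(total, 2))  # 0.85
```

Charging the wall penalty per step rather than once per collision matches the "for however long it remains in contact" rule, so the agent is pushed to get off the wall quickly rather than just to avoid the first touch.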

Once I get the basics down, I want to start building some more complex scenarios. The first 2-3 iterations are going to be pushing something towards a goal area, avoiding obstacles while crossing an environment towards a goal, and then trying to locate another agent that is attempting to hide.

I am hoping that ML Agents in Unity continues to be straightforward. I am excited to keep this up to date. I am going to record some video of the tests and begin posting on YouTube. There is a vibrant and interesting community working in this space right now. Please take some time to check it out. It isn't all just linear algebra. Some of the models people are building are in depth, and the training along the way is hilarious to watch. I really want to push my model forward and do some interesting things. I find learning in all instances deeply intriguing. I am excited to see where this goes.